Data-driven Sketch Interpretation (study notes)

Date:

These are study notes for the talk on data-driven sketch interpretation by Prof. FU Hongbo.

Data-driven Sketch Interpretation

Sketch interpretation is ill-posed: it requires additional cues beyond the input 2D sketch. Such cues can be found in existing sketch, image, and 3D model data, which motivates data-driven approaches.

- What does a sketch represent: sketch recognition

- What components constitute a sketched object: sketch segmentation & labeling

- What is the underlying 3D shape: 3D interpretation

- What is the underlying photo-realistic object: sketch-based image generation

useful links

- dataset:

QuickDraw dataset, 50 million doodles
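As a rough illustration of what vector sketch data looks like, the snippet below parses one record laid out like QuickDraw's simplified ndjson files, where `drawing` is a list of strokes and each stroke is a pair of x and y lists. The record itself is made up for this example, and `parse_strokes` is a hypothetical helper, not part of any official tooling.

```python
import json

# A made-up record in the layout of QuickDraw's simplified ndjson files:
# "drawing" is a list of strokes, and each stroke is [xs, ys].
sample_line = json.dumps({
    "word": "cat",
    "recognized": True,
    "drawing": [
        [[0, 10, 20], [0, 5, 0]],
        [[5, 15], [30, 30]],
    ],
})


def parse_strokes(line):
    """Return (label, strokes); each stroke is a list of (x, y) points."""
    record = json.loads(line)
    strokes = [list(zip(xs, ys)) for xs, ys in record["drawing"]]
    return record["word"], strokes


label, strokes = parse_strokes(sample_line)
print(label, [len(s) for s in strokes])
```

Keeping strokes as ordered point lists preserves the drawing order, which the vector-based methods in these notes rely on.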

Data-driven Sketch Recognition

Progression: introduce deep learning methods -> change the input from rasterized bitmaps to vector sketches -> change the input from individual objects to objects in context

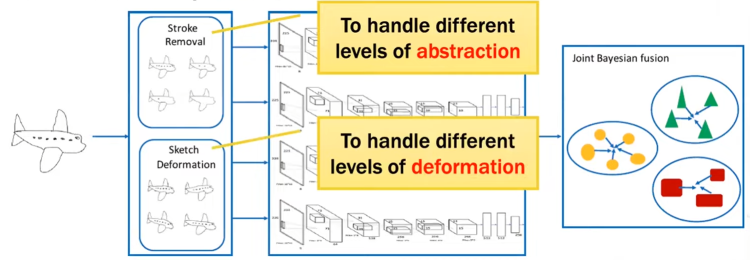

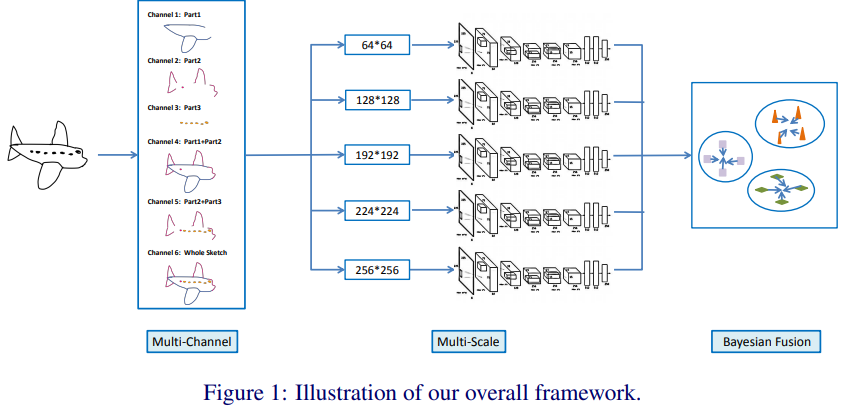

»> Sketch-a-Net [arxiv][paper] by Yu et al. [IJCV 2017], CNN model

stroke removal: handle different levels of abstraction

sketch deformation: handle different levels of deformation
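Stroke removal can be read as a data augmentation step. The sketch below is one possible version, assuming that later and shorter strokes should be more likely to be dropped; the function names, the `alpha` and `beta` weights, and the normalization are illustrative choices, not the paper's exact formulation.

```python
import math
import random


def stroke_length(stroke):
    """Total polyline length of a stroke given as a list of (x, y) points."""
    return sum(math.dist(p, q) for p, q in zip(stroke, stroke[1:]))


def removal_probabilities(strokes, alpha=1.0, beta=1.0):
    """Later and shorter strokes get a higher probability of being removed."""
    n = len(strokes)
    lengths = [stroke_length(s) for s in strokes]
    longest = max(lengths) or 1.0
    scores = [
        math.exp(alpha * (i / max(n - 1, 1)) - beta * (length / longest))
        for i, length in enumerate(lengths)
    ]
    total = sum(scores)
    return [s / total for s in scores]


def remove_strokes(strokes, fraction, rng):
    """Drop roughly `fraction` of the strokes, sampled without replacement."""
    n_remove = int(round(fraction * len(strokes)))
    keep = list(range(len(strokes)))
    for _ in range(n_remove):
        probs = removal_probabilities([strokes[i] for i in keep])
        drop = rng.choices(range(len(keep)), weights=probs, k=1)[0]
        keep.pop(drop)
    return [strokes[i] for i in keep]


# Four horizontal strokes, drawn from longest to shortest.
example = [
    [(0, 0), (100, 0)],
    [(0, 10), (50, 10)],
    [(0, 20), (20, 20)],
    [(0, 30), (5, 30)],
]
augmented = remove_strokes(example, 0.5, random.Random(0))
print(len(augmented))
```

Varying `fraction` during training gives the network sketches at several levels of abstraction.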

accuracy: 77.95% on TU-Berlin, surpassing human performance ([Eitz et al. SIGGRAPH 2012])

Key features:

- a number of model architecture and learning parameter choices specifically for addressing the iconic and abstract nature of sketches;

- a multi-channel architecture designed to model the sequential ordering of strokes in each sketch;

- a multi-scale network ensemble to address the variability in abstraction and sparsity, followed by a joint Bayesian fusion scheme to exploit the complementarity of different scales;
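To make the multi-channel idea concrete, here is a minimal sketch that splits the strokes into three groups by drawing order and rasterizes the groups into separate binary channels. The six-channel layout (three parts, two neighbouring pairs, the whole sketch) is my reading of the approach and should be treated as an assumption; the helper names and the integer pixel coordinates are also assumptions.

```python
def split_by_order(strokes, parts=3):
    """Split strokes into `parts` consecutive groups following drawing order."""
    n = len(strokes)
    bounds = [round(i * n / parts) for i in range(parts + 1)]
    return [strokes[bounds[i]:bounds[i + 1]] for i in range(parts)]


def rasterize(strokes, size):
    """Mark pixels touched by each polyline on a size x size binary grid."""
    grid = [[0] * size for _ in range(size)]
    for stroke in strokes:
        for (x0, y0), (x1, y1) in zip(stroke, stroke[1:]):
            steps = max(abs(x1 - x0), abs(y1 - y0), 1)
            for t in range(steps + 1):
                x = round(x0 + (x1 - x0) * t / steps)
                y = round(y0 + (y1 - y0) * t / steps)
                if 0 <= x < size and 0 <= y < size:
                    grid[y][x] = 1
    return grid


def ordered_channels(strokes, size=8):
    """Six binary channels: 3 parts, 2 neighbouring pairs, whole sketch."""
    a, b, c = split_by_order(strokes, 3)
    groups = [a, b, c, a + b, b + c, a + b + c]
    return [rasterize(g, size) for g in groups]


# Three horizontal strokes drawn top to bottom on an 8 x 8 canvas.
example = [[(0, 0), (7, 0)], [(0, 3), (7, 3)], [(0, 7), (7, 7)]]
channels = ordered_channels(example, size=8)
print(len(channels))
```

Stacking the channels lets an ordinary CNN see which parts of the sketch were drawn early and which were drawn late.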

Contributions:

- for the first time, a representation learning model based on DNN is presented for sketch recognition in place of the conventional hand-crafted feature based sketch representations;

- we demonstrate how sequential ordering information in sketches can be embedded into the DNN architecture and in turn improve sketch recognition performance;

- we propose a multi-scale network ensemble that fuses networks learned at different scales together via joint Bayesian fusion to address the variability of levels of abstraction in sketches

Extensive experiments on the largest free-hand sketch benchmark dataset, the TU-Berlin sketch dataset, show that our model significantly outperforms existing approaches and can even beat humans at sketch recognition.

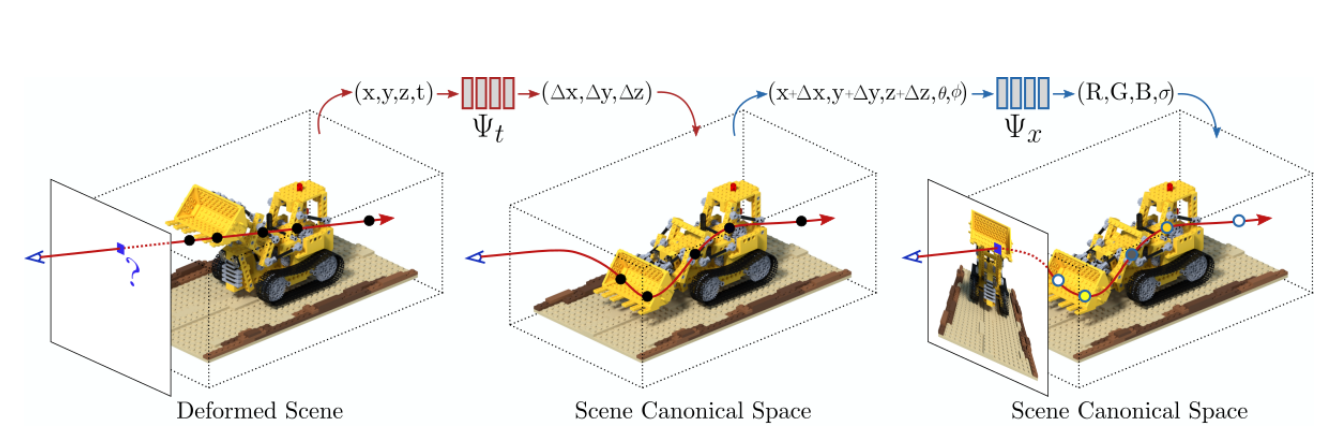

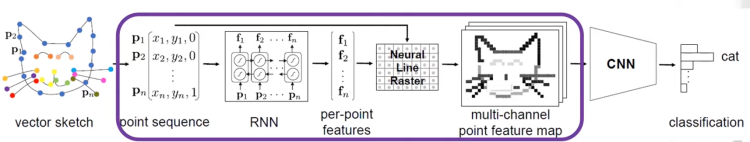

»> Sketch-R2CNN [arxiv][paper] by Li et al. [TVCG]

An RNN-Rasterization-CNN architecture for vector sketch recognition

Use an RNN to extract per-point features in the vector space. Introduce a differentiable Neural Line Rasterization module to connect the vector space and the pixel space for end-to-end learning.

To recognize a sketched object, most existing methods discard such important temporal ordering and grouping information from human and simply rasterize sketches into binary images for classification.

Key features:

- To bridge the gap between these two spaces in neural networks, we propose a neural line rasterization module to convert the vector sketch along with the attention estimated by RNN into a bitmap image, which is subsequently consumed by CNN

- uses an RNN for stroke attention estimation in the vector space

- the neural line rasterization module is designed in a differentiable way to yield a unified pipeline for end-to-end learning

- uses a CNN for 2D feature extraction in the pixel space
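The reason a rasterizer can sit between an RNN and a CNN is that each pixel value can be written as a simple function of the per-point attention. The sketch below is a simplified stand-in for that idea, not the paper's module: it draws one polyline and assigns every covered pixel the linearly interpolated attention of the segment's two endpoints. The function name and the integer pixel coordinates are assumptions.

```python
def rasterize_with_attention(points, attention, size):
    """Draw one polyline; each pixel gets the linearly interpolated attention.

    points: integer (x, y) pixel coordinates along a single stroke.
    attention: one scalar per point (e.g. produced by an RNN).
    """
    image = [[0.0] * size for _ in range(size)]
    for i in range(len(points) - 1):
        (x0, y0), (x1, y1) = points[i], points[i + 1]
        steps = max(abs(x1 - x0), abs(y1 - y0), 1)
        for s in range(steps + 1):
            t = s / steps
            x = round(x0 + (x1 - x0) * t)
            y = round(y0 + (y1 - y0) * t)
            if 0 <= x < size and 0 <= y < size:
                # Linear in attention[i] and attention[i + 1], so the partial
                # derivatives of this pixel w.r.t. them are (1 - t) and t.
                image[y][x] = (1 - t) * attention[i] + t * attention[i + 1]
    return image


image = rasterize_with_attention([(0, 0), (4, 0)], [0.0, 1.0], size=5)
print(image[0])
```

Written with tensors in an autodiff framework, the same arithmetic would let the classification loss send gradients back to the attention values.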

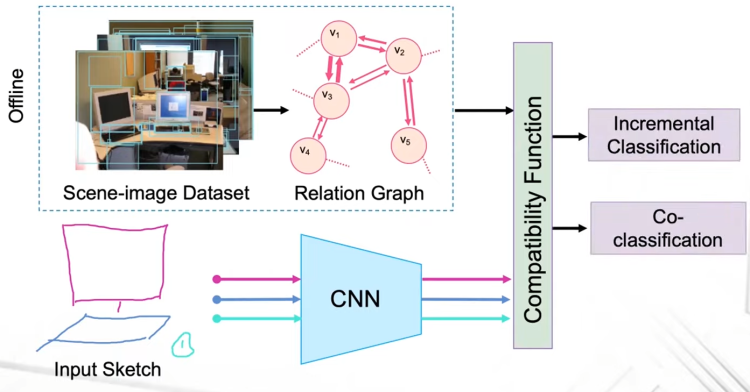

»> Context-based Sketch Classification [paper] by Zhang et al. [Expressive 2018]

An individual object can be ambiguous to recognize, so context information can be very useful.

A scene can be split into objects, but we do not know their labels. Using a CNN together with a relation graph learned from realistic photos, a compatibility function can help co-classify the objects.
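A toy version of co-classification, assuming the compatibility function is a pairwise prior that multiplies the per-object CNN scores. All scores, labels, and priors below are invented for illustration, and the exhaustive search is only practical for very small scenes; the paper's actual relations and inference are not reproduced here.

```python
from itertools import product


def co_classify(unary_scores, compatibility):
    """Pick the joint labeling that maximizes unary scores times pair priors.

    unary_scores: one dict {label: score} per sketched object.
    compatibility: dict {(label_a, label_b): prior}; missing pairs fall back
        to a small constant.
    """
    best_labels, best_score = None, float("-inf")
    for labels in product(*(scores.keys() for scores in unary_scores)):
        score = 1.0
        for obj, label in enumerate(labels):
            score *= unary_scores[obj][label]
        for i in range(len(labels)):
            for j in range(i + 1, len(labels)):
                pair = (labels[i], labels[j])
                prior = compatibility.get(pair, compatibility.get(pair[::-1], 0.01))
                score *= prior
        if score > best_score:
            best_labels, best_score = labels, score
    return best_labels


# Object 0 is ambiguous on its own; object 1 is clearly a keyboard.
unary = [
    {"computer mouse": 0.45, "potato": 0.55},
    {"keyboard": 0.9, "plate": 0.1},
]
priors = {
    ("computer mouse", "keyboard"): 0.8,
    ("potato", "plate"): 0.6,
    ("potato", "keyboard"): 0.05,
    ("computer mouse", "plate"): 0.05,
}
print(co_classify(unary, priors))
```

Classified alone, the first object would be labeled "potato"; the keyboard next to it tips the joint decision towards "computer mouse".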

To this end, we need to solve two main challenges.

Firstly the extraction of relation priors on categories of sketched objects requires voluminous sketch data. Unfortunately, there is a lack of large-scale datasets of sketched scenes at present. Existing sketch datasets, such as [7, 12, 32], only comprise drawings of single objects. We resort to existing image datasets that contain rich annotations of objects in real-world scenes, and we show that it is a viable solution to transfer and apply the learned relation priors across different domains (i.e., image to sketch).

The other challenge is to identify and quantify relations that are effective for ambiguity resolution, and then to unify them.

Contributions:

extracting and transferring relation priors from the image domain to the sketch domain to alleviate recognition ambiguity in sketches;

a context-based sketch classification framework with two specific algorithms for different sketching scenarios, each achieving higher accuracy than the state-of-the-art CNN for single-object classification;

a new dataset of scene sketches for benchmarking the performance of relevant recognition algorithms.

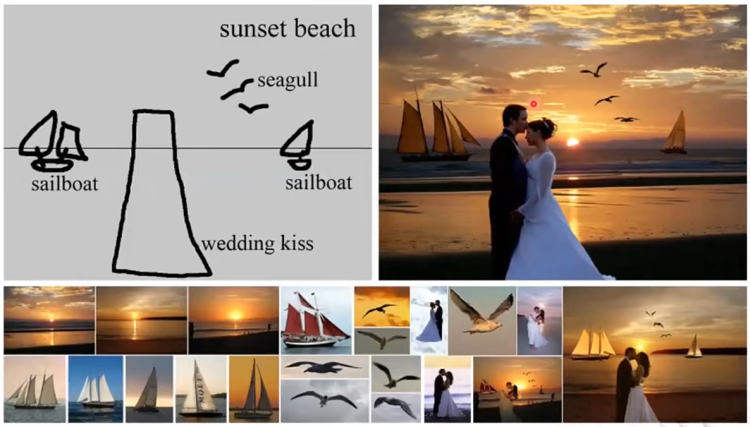

»> Courtesy of Chen et al. [SIGGRAPH Asia 2009]

Potential application: from sketch to photo

Sketch Segmentation and Labeling

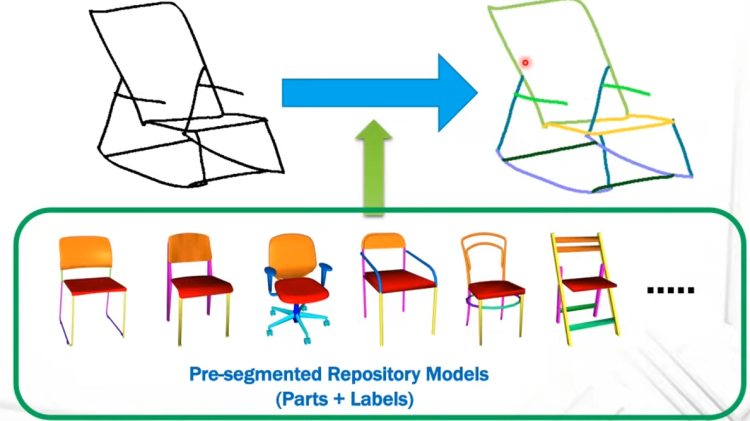

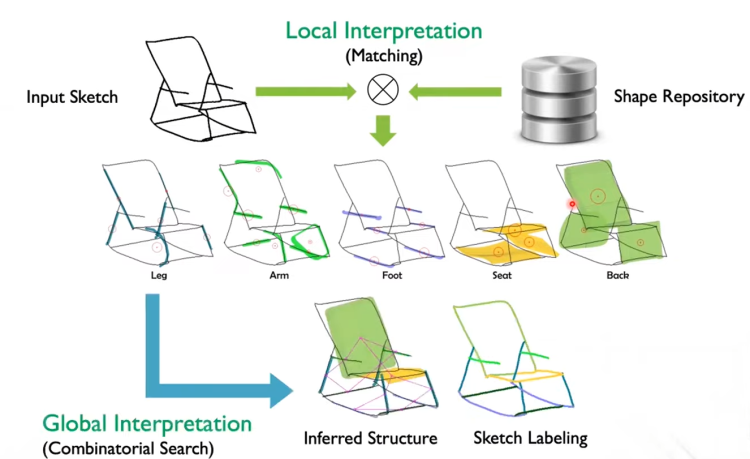

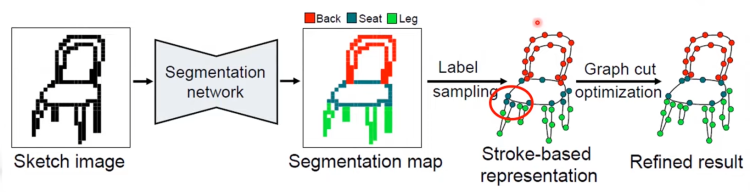

»> Data-driven Segmentation and Labeling for Freehand Sketches [paper] by Huang et al. [SIGGRAPH Asia 2014]

Transfer the segmentations from 3D models to freehand sketches

The method takes no semantic segmentation information as input and aims to derive semantically meaningful part-level structures, which requires solving two challenging interdependent problems:

segmenting input possibly sloppy sketches into semantically meaningful components (sketch segmentation)

and recognizing the categories of individual components (sketch recognition).

Shortcomings of straightforward methods:

Direct retrieval approach: it is questionable whether such a desired model exists in a repository of moderate size. In addition, freehand sketches have inherent distortions. Therefore, even if such a model does exist, it would be difficult to find a desired global camera projection due to the differences in pose and proportion between the sketched object and the 3D model.

This might over-constrain the search space of candidate parts. In addition, this approach also over-constrains the number of candidate parts for each category. Consequently, there might be no suitable candidates for certain parts.

»> Data-driven Approaches for Sketch Segmentation & Labeling [paper] by Li et al. [Computer Graphics and Applications, 2019]

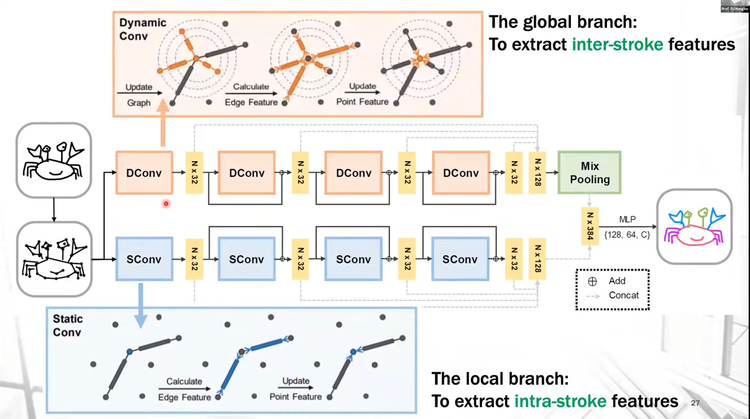

»> SketchGNN: Semantic Sketch Segmentation with GNNs [arxiv][paper] by Yang et al. [TOG 2021]

The first GNN-based approach for this task.
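One natural way to hand a sketch to a graph neural network is to treat sampled points as nodes and connect consecutive points within each stroke. The snippet below shows that construction only; it is an assumed, simplified graph, and `sketch_to_graph` is a hypothetical helper rather than the paper's code.

```python
def sketch_to_graph(strokes):
    """Return (nodes, edges): nodes are points, edges link stroke neighbours."""
    nodes, edges = [], []
    for stroke in strokes:
        start = len(nodes)
        nodes.extend(stroke)
        # Connect each point to the next one along the same stroke only.
        for k in range(start, len(nodes) - 1):
            edges.append((k, k + 1))
    return nodes, edges


nodes, edges = sketch_to_graph([[(0, 0), (1, 0), (2, 0)], [(5, 5), (6, 5)]])
print(len(nodes), edges)
```

Per-node labels predicted on such a graph can be mapped back to strokes to obtain a segmentation.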

3D Interpretations of Sketches

Reconstruction-based

- Use sketches as hard constraints

- Employ a less constrained shape space

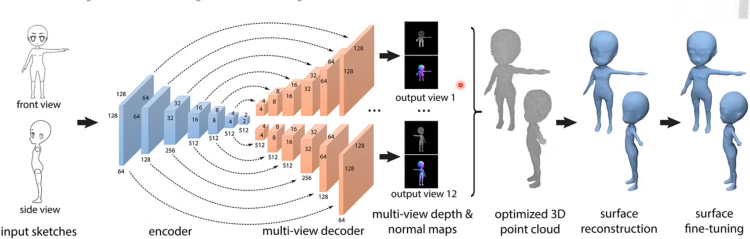

»> 3D Shape Reconstruction from Sketches via Multi-view Convolutional Networks [arxiv][paper] by Lun et al. [3DV 2017]

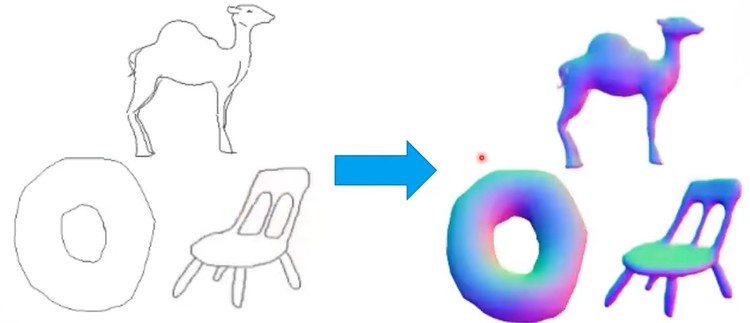

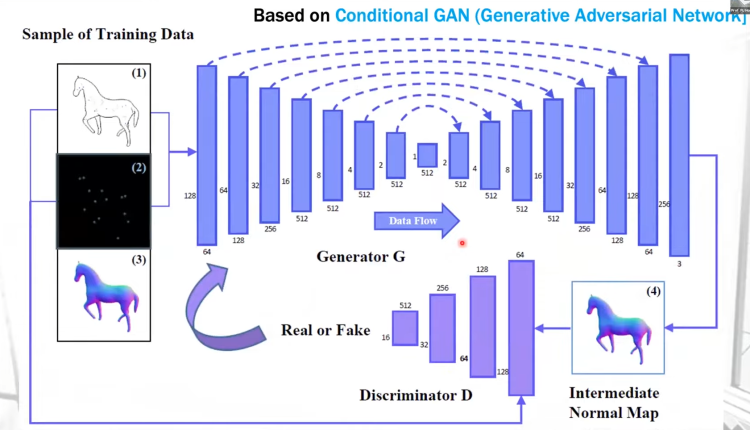

»> Interactive Sketch-Based Normal Map Generation with Deep Neural Networks [paper] by Su et al. [i3D 2018]

Approximation-based

- Use sketches as soft constraints

- Employ a more constrained shape space

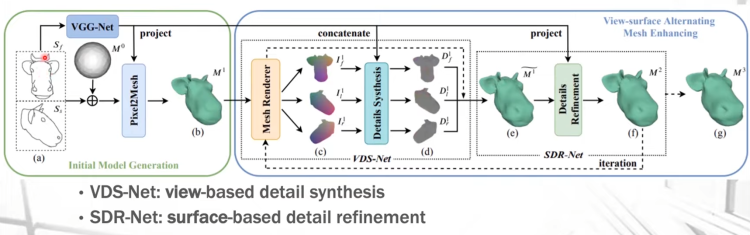

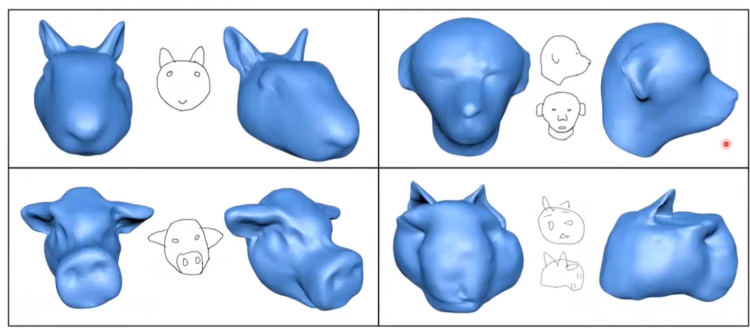

»> SAniHead [project] by Du et al. [TVCG 2020]

Shape space: largely constrained by the deformation of a template mesh; less sensitive to errors in input sketches

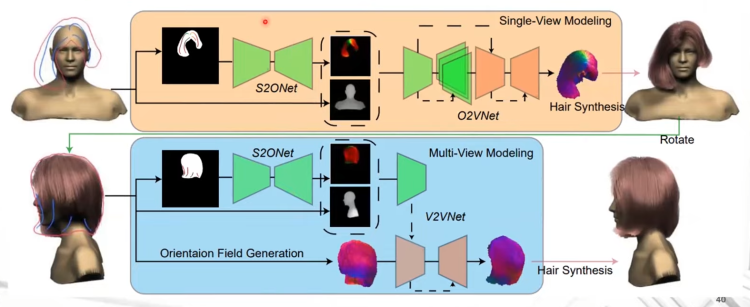

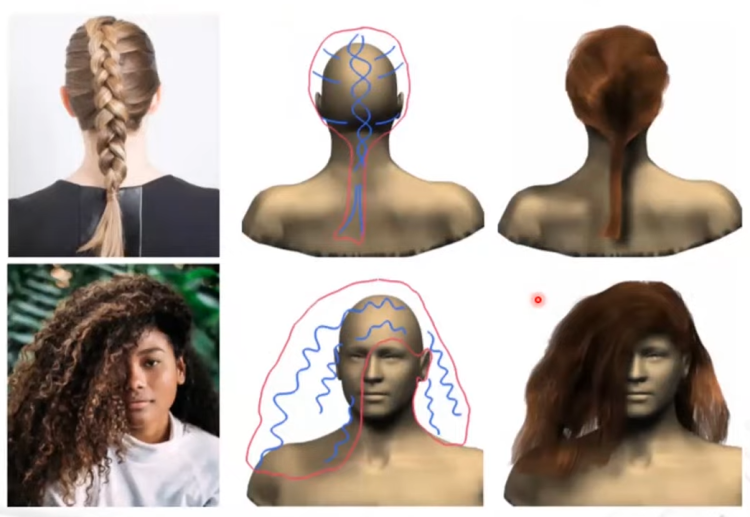

»> Deep Sketch Hair [arxiv][paper] by Shen et al. [TVCG 2020]

Difficult to synthesize hair details due to the adopted orientation fields

Sketch-based Image Generation

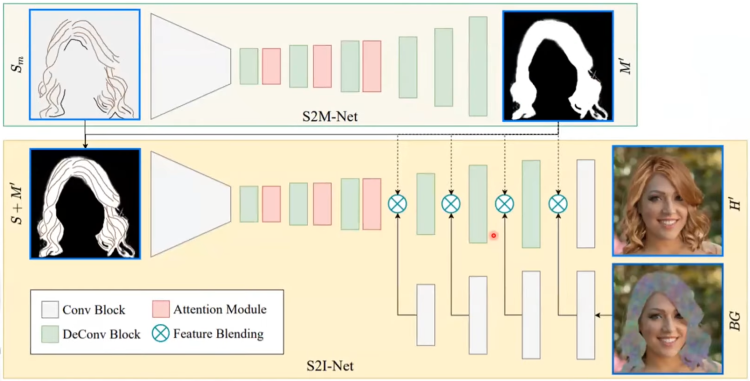

»> Sketch Hair Salon [project][paper] by Xiao et al. [SIGGRAPH Asia 2021]

A traditional pipeline for sketch-based hair synthesis/modeling:

sketch -> orientation map -> hair image/model

Orientation maps are not very effective at encoding complex hair structures, and strokes generated from orientation maps rarely form meaningful sketches. Instead of using an orientation map, the approach is to predict hair images directly from the hair sketch.

S2M-Net: predict the hair mask

Using dataset: 4.5K hair image-sketch pairs

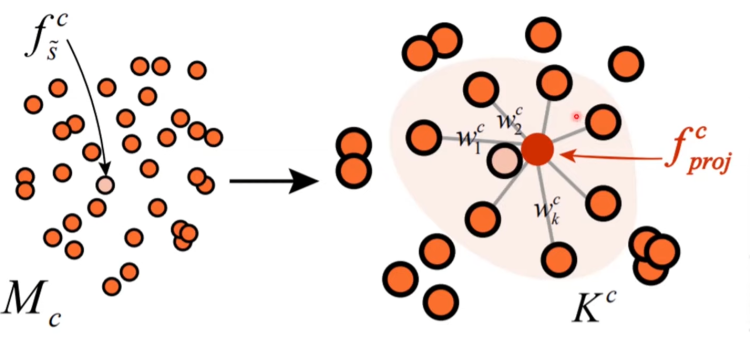

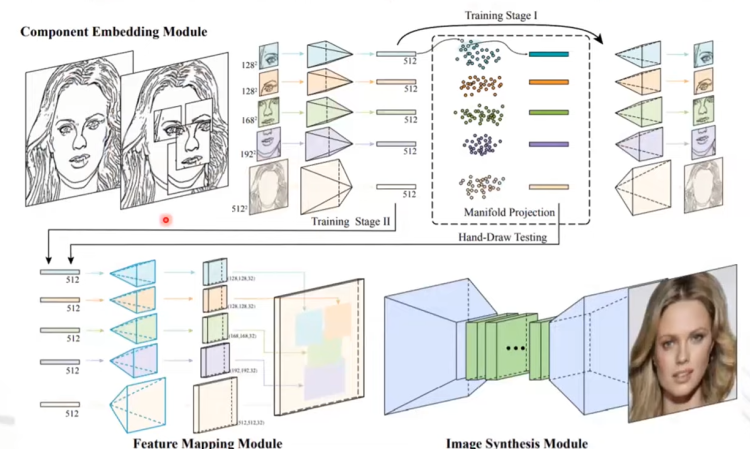

»> Deep Generation of Face Images from Sketches [paper] by Chen et al. [SIGGRAPH 2020]

Generate photo-realistic face image from sketches.

Use manifold projection: assuming the feature space is locally linear, a locally linear embedding can be used to refine an input sketch. Given the input feature vector of a sketch component (i.e. a face component), we retrieve the nearest component samples and interpolate between them.
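The retrieve-and-interpolate step can be written in the style of locally linear embedding. The notation is chosen here for illustration: $f$ is the feature vector of the input component, $f_1, \dots, f_K$ are its $K$ nearest samples retrieved from the training features, $w_k$ are the interpolation weights, and $\hat{f}$ is the refined feature.

```latex
\min_{w_1,\dots,w_K} \Bigl\| f - \sum_{k=1}^{K} w_k f_k \Bigr\|_2^2
\quad \text{s.t.} \quad \sum_{k=1}^{K} w_k = 1,
\qquad
\hat{f} = \sum_{k=1}^{K} w_k f_k
```

Because $\hat{f}$ is a combination of features from real samples, a rough or distorted input component is pulled towards the space of plausible ones.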

Challenges and Opportunities

- Sketch datasets: we need datasets that are larger in scale, multi-view, cover different levels of abstraction and deformation, are scene-level, and are targeted at specific applications

- Learning models specialized for sketches: sparsity, sequential order, stroke structure

- Sketch + time: sketch animation, sketch-based 3D animation, sketch-based video editing

- 3D sketches: 3D modelling, 3D animation