2023 SIGGRAPH Paper Selection

Date:

Full list of papers: https://kesen.realtimerendering.com/sig2023.html

Some papers of interest from SIGGRAPH 2023, including NeRF rendering, tbd…

Selected papers from SIGGRAPH 2023

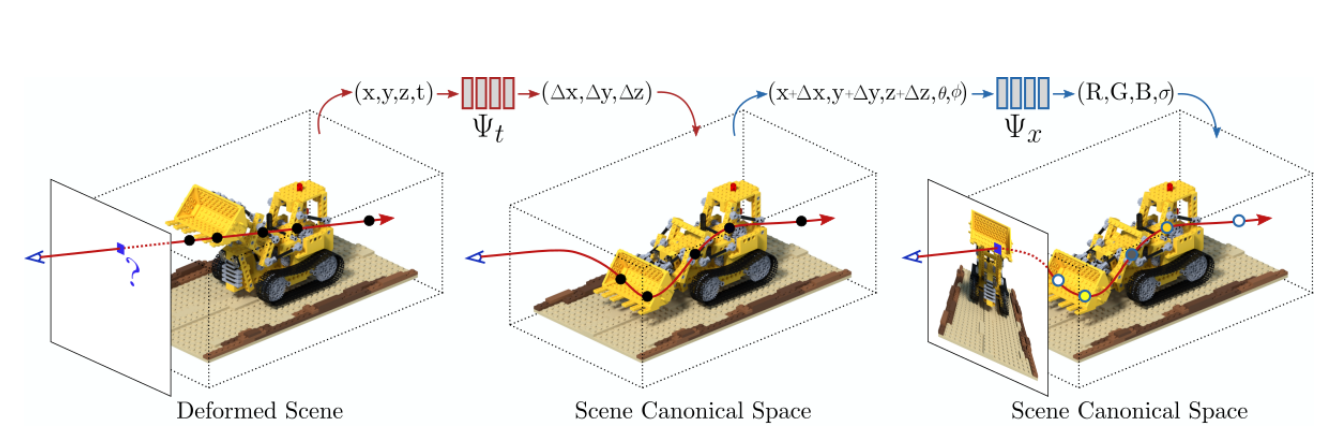

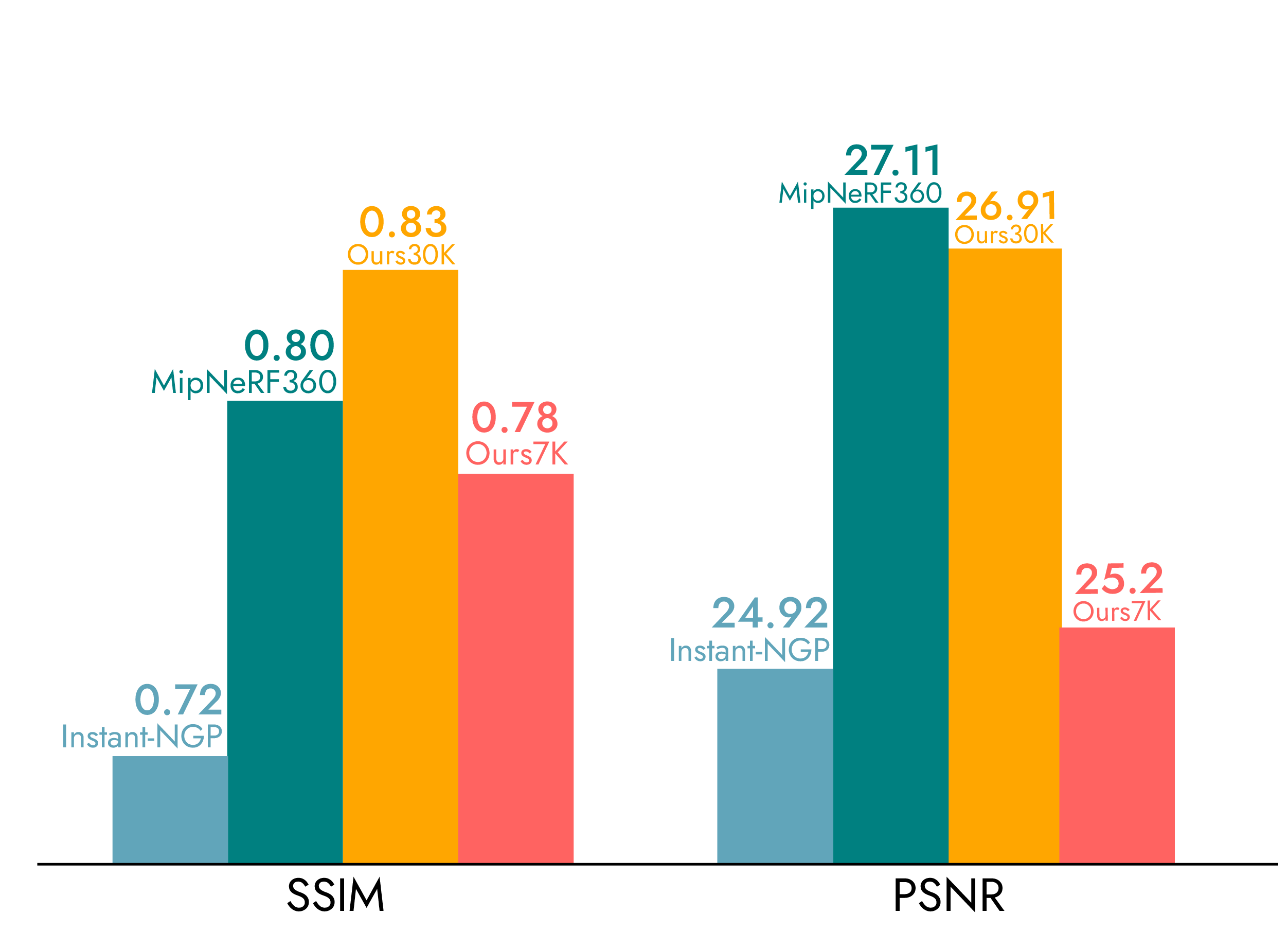

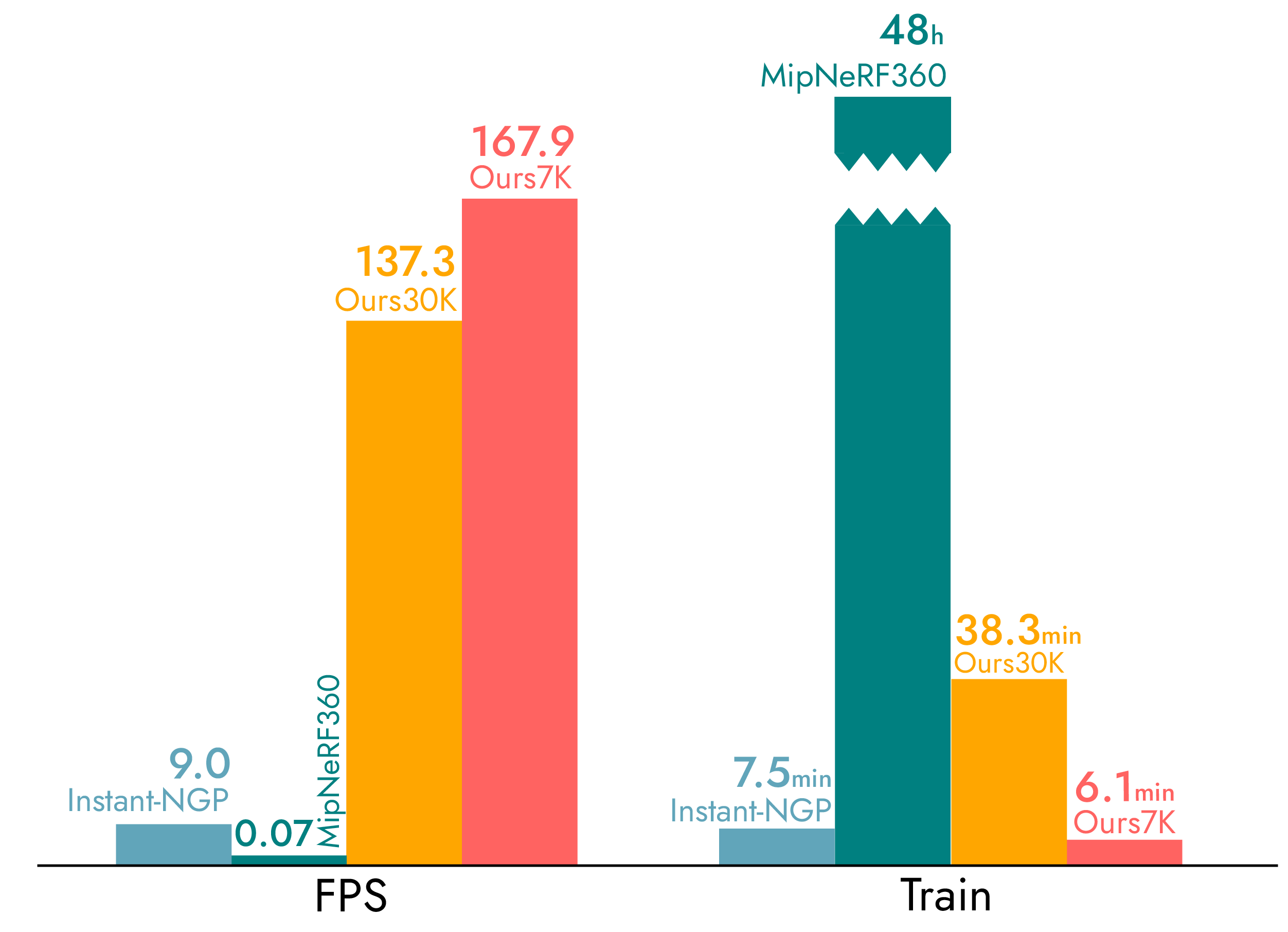

3D Gaussian Splatting for Real-time Radiance Field Rendering

Highlighted results:

The technique renders radiance fields in real time, at 30 frames per second or more, with high visual quality. Scenes are represented with 3D Gaussians, which can be optimized efficiently.
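The paper parameterizes each Gaussian's anisotropic covariance as $\Sigma = R S S^T R^T$, built from a scale vector and a rotation quaternion, so that gradient descent cannot produce an invalid (non positive semi-definite) covariance. A minimal NumPy sketch of that construction follows; the function names are hypothetical and this is not the authors' CUDA implementation.

```python
import numpy as np

def quat_to_rotmat(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q / np.linalg.norm(q)  # normalize so R is a proper rotation
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ])

def gaussian_covariance(scale, quat):
    """Anisotropic 3D covariance Sigma = R S S^T R^T.

    Optimizing (scale, quat) instead of Sigma directly keeps Sigma
    symmetric positive semi-definite at every optimization step.
    """
    R = quat_to_rotmat(np.asarray(quat, dtype=float))
    S = np.diag(np.asarray(scale, dtype=float))
    M = R @ S
    return M @ M.T

# A flat, elongated Gaussian rotated 90 degrees about the z axis.
q = [np.cos(np.pi / 4), 0.0, 0.0, np.sin(np.pi / 4)]
print(np.round(gaussian_covariance([0.5, 0.1, 0.02], q), 4))
```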

Visibility-aware rendering also accelerates training, matching the speed of the fastest prior methods at comparable quality. With just one extra hour of training, the output reaches state-of-the-art quality.

“First, starting from sparse points produced during camera calibration, we represent the scene with 3D Gaussians that preserve desirable properties of continuous volumetric radiance fields for scene optimization while avoiding unnecessary computation in empty space; Second, we perform interleaved optimization/density control of the 3D Gaussians, notably optimizing anisotropic covariance to achieve an accurate representation of the scene; Third, we develop a fast visibility-aware rendering algorithm that supports anisotropic splatting and both accelerates training and allows realtime rendering. We demonstrate state-of-the-art visual quality and real-time rendering on several established datasets.”
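The visibility-aware part of the renderer comes down to sorting projected Gaussians by depth and alpha-blending them front to back, $C = \sum_i c_i \alpha_i \prod_{j<i} (1 - \alpha_j)$. The sketch below does this for a single pixel in NumPy, assuming the 2D means, 2D covariances, opacities, colors, and depths are already computed; the paper's renderer instead sorts per screen tile on the GPU. The 0.99 alpha clamp and the early-termination threshold `t_min` are illustrative choices, and `composite_pixel` is a hypothetical name.

```python
import numpy as np

def composite_pixel(pixel, means2d, covs2d, opacities, colors, depths, t_min=1e-4):
    """Front-to-back alpha blending of projected 2D Gaussians at one pixel.

    Returns the accumulated RGB color and the remaining transmittance.
    """
    color = np.zeros(3)
    transmittance = 1.0
    for i in np.argsort(depths):  # visibility: nearest Gaussian first
        d = pixel - means2d[i]
        # Gaussian falloff (Mahalanobis distance) scaled by the learned opacity
        power = -0.5 * d @ np.linalg.solve(covs2d[i], d)
        alpha = min(0.99, opacities[i] * np.exp(power))
        color += transmittance * alpha * colors[i]
        transmittance *= 1.0 - alpha
        if transmittance < t_min:  # pixel is effectively opaque: stop early
            break
    return color, transmittance

# Two Gaussians centered on the pixel; the green one is listed first but is farther away.
pixel = np.array([4.0, 4.0])
means2d = np.array([[4.0, 4.0], [4.0, 4.0]])
covs2d = np.array([np.eye(2), np.eye(2)])
opacities = np.array([0.5, 0.5])
colors = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]])
depths = np.array([2.0, 1.0])
rgb, T = composite_pixel(pixel, means2d, covs2d, opacities, colors, depths)
print(rgb, T)
```

Because the red Gaussian is nearer, it receives blending weight 0.5 while the green one behind it only receives 0.25.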

OctFormer: Octree-based Transformers for 3D Point Clouds

Single-author paper

A 3D segmentation work, evaluated on the ScanNet200 dataset and compared against sparse-voxel-based CNNs.

Previous transformers for 3D point clouds, including global transformers, window transformers, downsampled transformers, and neighborhood transformers, each have their own intrinsic problems.

Goal:

Scalable: overcome the $O(N^2)$ complexity of attention over all $N$ points.

Unified: a single network for all 3D understanding tasks.
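One way to escape the $O(N^2)$ cost is to restrict attention to local windows holding a fixed number of points, which is the idea behind OctFormer's octree attention: points are ordered by their octree keys (a z-order curve) and chunked into equal-size windows, so the cost drops to $O(NK)$ for window size $K$. The NumPy sketch below is a simplified illustration under stated assumptions: Morton codes of quantized coordinates stand in for octree keys, there is a single head with no learned projections, and dilated windows are omitted. The names `morton_code` and `windowed_attention` are hypothetical.

```python
import numpy as np

def morton_code(ijk, bits=10):
    """Interleave the bits of integer (i, j, k) grid coordinates (z-order)."""
    code = np.zeros(len(ijk), dtype=np.int64)
    for b in range(bits):
        for axis in range(3):
            code |= ((ijk[:, axis] >> b) & 1).astype(np.int64) << (3 * b + axis)
    return code

def windowed_attention(points, feats, window=32, bits=10):
    """Self-attention restricted to windows of `window` consecutive points in z-order."""
    n, c = feats.shape
    # Quantize coordinates to a 2^bits grid and sort points along the z-order curve
    lo, hi = points.min(0), points.max(0)
    ijk = ((points - lo) / (hi - lo + 1e-9) * (2**bits - 1)).astype(np.int64)
    order = np.argsort(morton_code(ijk, bits), kind="stable")
    # Pad to a multiple of the window size; padded keys are masked out below
    pad = (-n) % window
    x = np.concatenate([feats[order], np.zeros((pad, c))]).reshape(-1, window, c)
    valid = np.concatenate([np.ones(n, bool), np.zeros(pad, bool)]).reshape(-1, window)
    # Scaled dot-product attention inside each window: cost O(N * window * C)
    scores = x @ x.transpose(0, 2, 1) / np.sqrt(c)
    scores = np.where(valid[:, None, :], scores, -np.inf)
    scores -= scores.max(-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(-1, keepdims=True)
    out = (weights @ x).reshape(-1, c)[:n]
    # Scatter results back to the original point order
    result = np.empty_like(feats)
    result[order] = out
    return result

rng = np.random.default_rng(0)
pts = rng.random((100, 3))
f = rng.standard_normal((100, 8))
out = windowed_attention(pts, f, window=32)
```

With 32-point windows each point attends to 32 spatially nearby points instead of all $N$, which is what makes the cost linear in $N$.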

Sparse Non-parametric BRDF Model

Non-parametric BRDF models were presented in Bagher et al., 2016. Instead of carefully designed parametric BRDFs, they use data-driven models to represent material BRDFs. Data-driven models are superior to parametric models in that their number of degrees of freedom, or implicit model parameters, is much higher.
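To make the degrees-of-freedom argument concrete, compare a small parametric model with a dense tabulated BRDF. The sketch below assumes a MERL-style layout of 90 × 90 × 180 bins over the half/difference angles $(\theta_h, \theta_d, \phi_d)$ with RGB values. `PARAMETRIC_PARAMS`, `TabulatedBRDF`, and the uniform nearest-bin lookup are hypothetical simplifications (MERL itself bins $\theta_h$ non-uniformly), and this is not the sparse representation used in the paper.

```python
import numpy as np

# Hypothetical parametric model: RGB diffuse albedo, RGB specular albedo, one shininess exponent.
PARAMETRIC_PARAMS = {"kd": 3, "ks": 3, "shininess": 1}

class TabulatedBRDF:
    """Data-driven isotropic BRDF stored as a dense table of RGB values.

    Indexed by the half/difference angles (theta_h, theta_d, phi_d); the default
    resolution matches the MERL layout of 90 x 90 x 180 bins.
    """

    def __init__(self, res=(90, 90, 180), channels=3):
        self.res = res
        self.table = np.zeros(res + (channels,), dtype=np.float32)

    def num_params(self):
        # Every entry is a free value, so the table size is the model's degrees of freedom.
        return self.table.size

    def lookup(self, theta_h, theta_d, phi_d):
        """Nearest-bin lookup with uniform bins (a simplification of MERL's binning)."""
        # theta_h, theta_d in [0, pi/2]; phi_d folded to [0, pi) using reciprocity
        ranges = (np.pi / 2, np.pi / 2, np.pi)
        idx = tuple(
            int(np.clip(angle / r * n, 0, n - 1))
            for angle, r, n in zip((theta_h, theta_d, phi_d % np.pi), ranges, self.res)
        )
        return self.table[idx]

brdf = TabulatedBRDF()
print("parametric:", sum(PARAMETRIC_PARAMS.values()), "tabulated:", brdf.num_params())
```

The gap (7 values versus millions) is the flexibility that data-driven models exploit, and also the storage cost that a compact or sparse representation has to tame.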

The paper compares against previous work using SNR (signal-to-noise ratio) and achieves higher scores.
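For reference, SNR in decibels is commonly computed as $10 \log_{10}\left(\sum x^2 / \sum (x - \hat{x})^2\right)$ for reference values $x$ and reconstruction $\hat{x}$. A minimal sketch follows; the paper may apply it to transformed BRDF values or per channel, so treat this exact definition as an assumption.

```python
import numpy as np

def snr_db(reference, estimate):
    """SNR in dB: 10 * log10(signal energy / reconstruction-error energy)."""
    reference = np.asarray(reference, dtype=float)
    error = reference - np.asarray(estimate, dtype=float)
    return 10.0 * np.log10(np.sum(reference ** 2) / np.sum(error ** 2))

# Signal energy 25, error energy 0.25: ratio of about 100, i.e. about 20 dB
print(snr_db([3.0, 4.0], [3.3, 4.4]))
```

Higher is better; a perfect reconstruction divides by zero, which NumPy reports as `inf` with a runtime warning.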

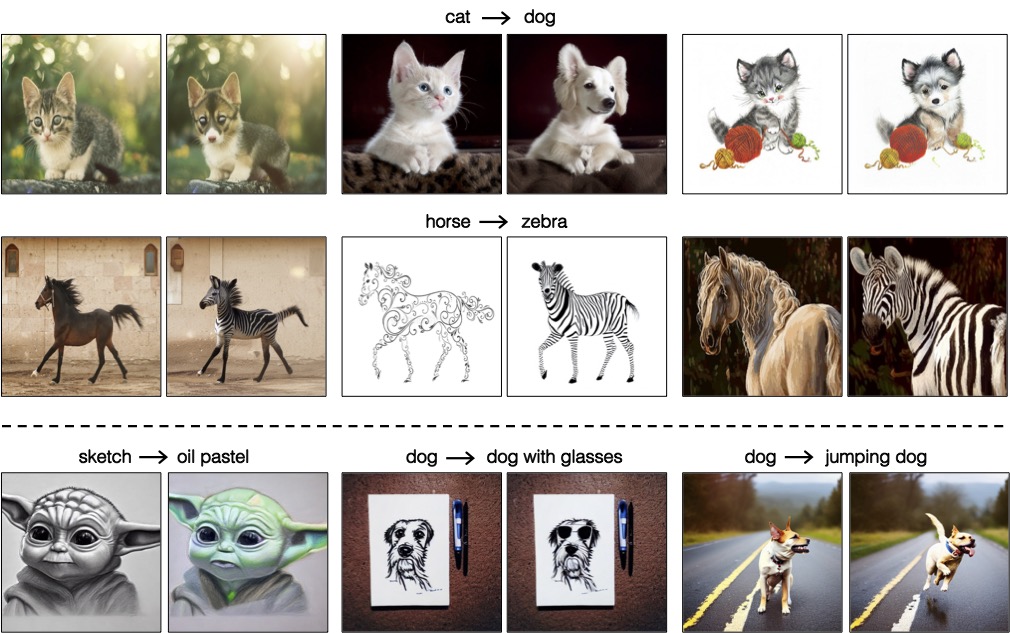

Zero-shot Image-to-Image Translation

Comparisons:

SDEdit + word swap: this method first stochastically adds noise up to an intermediate timestep and then denoises with the new text prompt, in which the source word is swapped for the target word.

Prompt-to-prompt (concurrent work): the authors use the officially released code. The method swaps the source word for the target word and uses the original cross-attention map as a hard constraint.

DDIM (Denoising Diffusion Implicit Models) + word swap: the authors invert with the deterministic forward DDIM process and then run DDIM sampling with an edited prompt, generated by swapping the source word for the target word.
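The word-swap baselines share two building blocks: jumping to an intermediate timestep with the closed-form forward process $x_t = \sqrt{\bar\alpha_t}\, x_0 + \sqrt{1 - \bar\alpha_t}\, \epsilon$ (SDEdit), and the deterministic DDIM update, which can run toward higher noise (inversion) or lower noise (sampling). The NumPy sketch below assumes a linear beta schedule and a hypothetical noise predictor `eps_model(x, t, prompt)` standing in for a text-conditioned diffusion network; for brevity, SDEdit's denoising here uses DDIM steps rather than the original stochastic sampler. The word swap itself is a plain string replacement on the prompt.

```python
import numpy as np

def make_alpha_bar(T=1000, beta_start=1e-4, beta_end=0.02):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)

def sdedit_noise(x0, t, alpha_bar, rng):
    """SDEdit's first step: sample x_t ~ q(x_t | x_0) directly at an intermediate timestep t."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps

def ddim_step(x, t_from, t_to, eps_model, prompt, alpha_bar):
    """Deterministic DDIM update (eta = 0): t_to > t_from inverts, t_to < t_from denoises."""
    eps = eps_model(x, t_from, prompt)
    x0_pred = (x - np.sqrt(1.0 - alpha_bar[t_from]) * eps) / np.sqrt(alpha_bar[t_from])
    return np.sqrt(alpha_bar[t_to]) * x0_pred + np.sqrt(1.0 - alpha_bar[t_to]) * eps

def run_ddim(x, schedule, eps_model, prompt, alpha_bar):
    """Apply DDIM steps along a list of timesteps (increasing = invert, decreasing = sample)."""
    for t_from, t_to in zip(schedule[:-1], schedule[1:]):
        x = ddim_step(x, t_from, t_to, eps_model, prompt, alpha_bar)
    return x

def ddim_word_swap(x0, steps, eps_model, src_prompt, tgt_prompt, alpha_bar):
    """DDIM + word swap: invert with the source prompt, then sample with the edited prompt."""
    x_T = run_ddim(x0, steps, eps_model, src_prompt, alpha_bar)
    return run_ddim(x_T, steps[::-1], eps_model, tgt_prompt, alpha_bar)

def sdedit_word_swap(x0, t_start, eps_model, tgt_prompt, alpha_bar, rng, n_steps=10):
    """SDEdit + word swap: noise to t_start, then denoise with the edited prompt."""
    x_t = sdedit_noise(x0, t_start, alpha_bar, rng)
    schedule = np.linspace(t_start, 0, n_steps + 1).astype(int)
    return run_ddim(x_t, list(schedule), eps_model, tgt_prompt, alpha_bar)

def toy_eps(x, t, prompt):
    """Hypothetical stand-in for a text-conditioned noise-prediction network."""
    return np.full_like(x, 0.1 if "cat" in prompt else -0.1)

src = "a photo of a cat"
tgt = src.replace("cat", "dog")  # the word swap
alpha_bar = make_alpha_bar()
x0 = np.linspace(-1.0, 1.0, 16).reshape(4, 4)
edited = ddim_word_swap(x0, [0, 250, 500, 750, 999], toy_eps, src, tgt, alpha_bar)
```

With a constant predictor, inverting and resampling with the unchanged prompt returns the input exactly; with a real network the round trip is only approximate, because the noise prediction is evaluated at different points on the way up and on the way down.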

Computational Long Exposure Mobile Photography

An interesting algorithm: a computational burst photography system that runs in a hand-held smartphone camera app and achieves long-exposure effects fully automatically, at the tap of the shutter button. Unfortunately, the source code has not been released.

Example:

Steps:

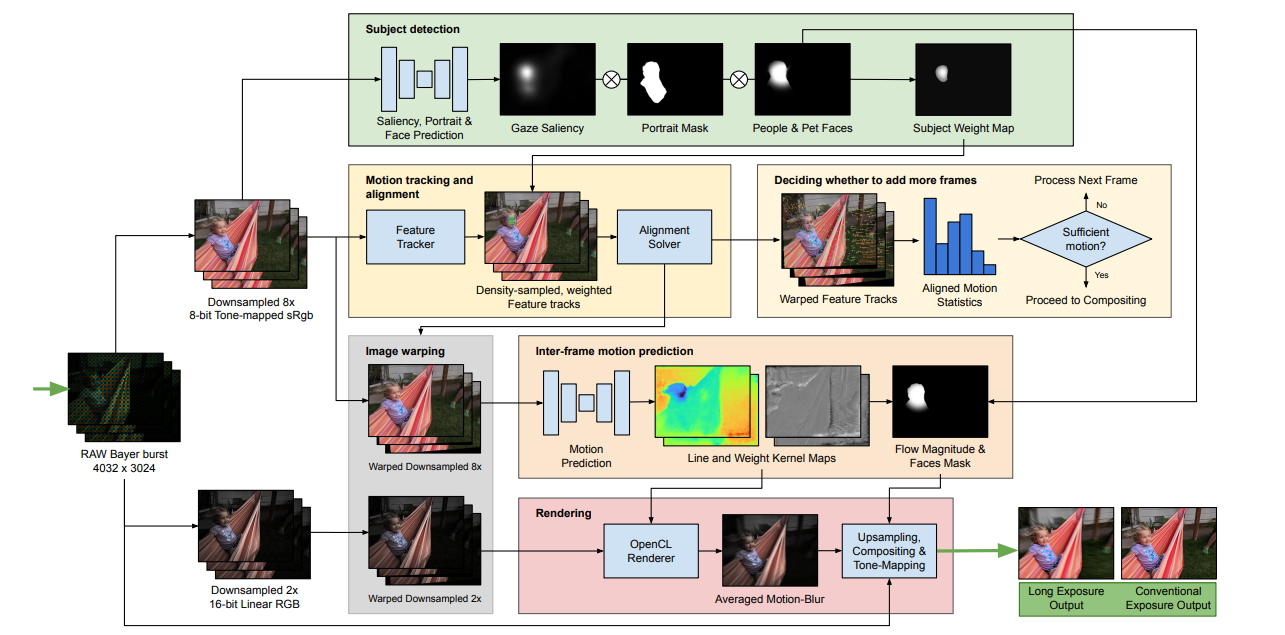

Our approach first detects and segments the salient subject. We track the scene motion over multiple frames and align the images in order to preserve desired sharpness and to produce aesthetically pleasing motion streaks.

We capture an under-exposed burst and select the subset of input frames that will produce blur trails of controlled length, regardless of scene or camera motion velocity.
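Since the source code is not released, the following is only a hypothetical heuristic illustrating the goal of a controlled trail length: given an estimated motion magnitude between consecutive frames, pick the contiguous run of frames whose accumulated motion is closest to a target trail length. Faster motion then automatically yields fewer frames. The function name and the motion input are assumptions, not the paper's method.

```python
import numpy as np

def select_frames(motion_px, target_trail_px):
    """Return (start, end), inclusive, of the contiguous frame run whose accumulated
    motion is closest to target_trail_px.

    motion_px[i] is the estimated motion magnitude (pixels) between frames i and i+1.
    """
    cum = np.concatenate([[0.0], np.cumsum(motion_px)])  # cum[k]: motion from frame 0 to k
    best, best_err = (0, 0), np.inf
    for start in range(len(cum)):
        for end in range(start, len(cum)):
            err = abs((cum[end] - cum[start]) - target_trail_px)
            if err < best_err:  # keep the earliest run among ties
                best, best_err = (start, end), err
    return best

print(select_frames([10, 10, 10, 10, 10], target_trail_px=30))  # slow motion: 4 frames
print(select_frames([40, 40, 40], target_trail_px=30))          # fast motion: 2 frames
```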

We predict inter-frame motion and synthesize motion-blur to fill the temporal gaps between the input frames.
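Averaging only the captured frames leaves gaps between copies of a moving object, which is why the missing motion is synthesized. The sketch below illustrates the idea on a 1D scanline, assuming a single known displacement per frame pair (a hypothetical simplification of per-pixel predicted motion): each frame is translated along its motion at several sub-frame offsets, and all samples are averaged. The names `shift_1d` and `synthesize_blur` are hypothetical.

```python
import numpy as np

def shift_1d(signal, dx):
    """Translate a 1D signal by dx pixels using linear interpolation (zeros outside)."""
    xs = np.arange(len(signal), dtype=float)
    return np.interp(xs - dx, xs, signal, left=0.0, right=0.0)

def synthesize_blur(frames, motions, n_sub=8):
    """Average each frame translated along its motion at n_sub sub-frame offsets.

    frames: list of 1D scanlines; motions[i]: displacement (px) from frame i to i+1.
    """
    samples = []
    for frame, dx in zip(frames[:-1], motions):
        for s in np.arange(n_sub) / n_sub:
            samples.append(shift_1d(frame, s * dx))
    samples.append(frames[-1])
    return np.mean(samples, axis=0)

# A bright point moving 8 px between two frames becomes a continuous 9 px streak.
line0 = np.zeros(32)
line0[10] = 1.0
line1 = np.zeros(32)
line1[18] = 1.0
streak = synthesize_blur([line0, line1], motions=[8.0], n_sub=8)
```

Averaging `line0` and `line1` alone would give two separate dots (ghosting) rather than a streak.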

Finally, we composite the blurred image with the sharp regular exposure to protect the sharpness of faces or areas of the scene that are barely moving, and produce a final high resolution and high dynamic range (HDR) photograph
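The final composite can be pictured as a per-pixel blend controlled by a protection mask. In the sketch below, the mask rule (face pixels, or pixels whose motion falls below a threshold) and the function names are hypothetical; the paper's actual masks and its HDR merge are not reproduced.

```python
import numpy as np

def protection_mask(motion_mag, face_mask, still_thresh=1.0):
    """Hypothetical rule: protect face pixels and pixels whose motion is below still_thresh."""
    still = (motion_mag < still_thresh).astype(float)
    return np.maximum(still, face_mask.astype(float))

def composite(sharp, blurred, mask):
    """Per-pixel blend: mask = 1 keeps the sharp exposure, mask = 0 keeps the synthetic blur."""
    m = np.clip(mask, 0.0, 1.0)[..., None]  # broadcast over color channels
    return m * sharp + (1.0 - m) * blurred

sharp = np.ones((2, 2, 3))
blurred = np.zeros((2, 2, 3))
motion = np.array([[0.2, 5.0], [5.0, 5.0]])  # estimated motion (px) at each pixel
faces = np.array([[0, 0], [1, 0]])
out = composite(sharp, blurred, protection_mask(motion, faces))
```

A soft, feathered mask (values between 0 and 1) would avoid visible seams where sharp and blurred regions meet.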

Inverse Global Illumination using a Neural Radiometric Prior

Inverse rendering and differentiable rendering; read later, tbd.

3DShape2VecSet: A 3D Shape Representation for Neural Fields and Generative Diffusion Models

Point cloud to 3D mesh? Seems like a significant improvement, tbd.